How will a social media ban impact Australian schools?

by Bec May

In a world-first move, the Australian government, with the support of the Coalition, passed a bill on 28 November 2024 banning children under 16 from using social media platforms, including TikTok, Facebook, Snapchat, Reddit, X, and Instagram. The law places responsibility for enforcement on the platforms. They face fines of up to AUD 50 million if they fail to prevent children younger than 16 from holding accounts.

Although Prime Minister Anthony Albanese has stressed that the onus of enforcement lies with the social media companies themselves, schools will inevitably bear some responsibility for managing the ban's broader implications.

Schools are already tasked with addressing issues like cyberbullying, exposure to harmful content, and online safety education, and they must now navigate the additional challenges posed by this legislation. These range from monitoring students online to bridging gaps in digital literacy, and the success of the ban will partly hinge on how effectively schools can adapt and implement supportive technologies and strategies. This raises a crucial question for educators: how can schools meet these demands while preparing teens for the realities of the digital world? Restrictions can shield students during their school years, but it is equally important to equip them with the critical thinking, resilience, and responsible online behaviours they need to navigate the online world independently.

Social media and young people's mental health

At the heart of this new legislation is a pressing concern: the profound impact of social media on young people's mental health.

Shadow communications minister David Coleman underscored this issue when interviewed by Sky News, stating, "What other generation in history has grown up being exposed to as much damaging content as this generation? [We can] divert our eyes from that and not talk about it, or we can stare it in the face, acknowledge it, and do something about it."

How harmful online content is shaping the lives of young Australians

The detrimental effects of social media are well documented by mental health experts, with research indicating that adolescents who spend significant time on social media are at higher risk of mental health struggles and disrupted sleep. Platforms such as TikTok and Instagram expose teens to harmful content, ranging from the toxic masculinity promoted by figures like Andrew Tate to the idealised, curated, and largely unattainable lifestyles showcased by influencers.

This environment creates relentless pressure for young Australians to conform to online standards, eroding their self-worth and creating a feedback loop of distress. A 2022 UK parliamentary report found that 40% of young girls feel worse about their appearance after using social media. Similarly, educators and parents have reported an uptick in aggressive behaviour among boys, attributing it largely to the influence of content like Tate's, which normalises misogyny and hyper-masculinity.

Addressing cyberbullying and online dangers

Cyberbullying is perhaps of even greater concern, with a 2021 study by the eSafety Commissioner revealing that one in five Australian teens has experienced severe emotional distress as a result of online bullying. Cyberbullying is primarily facilitated by social media channels, which by their very nature allow content to be posted and deleted rapidly. Bullies can post harmful material and remove it quickly, complicating efforts by schools and caregivers to document and respond to such incidents effectively.

Dr Danielle Einstein, clinical psychologist and author, believes the ban may help schools address these challenges by shifting the focus back to face-to-face interactions. As she explains, “Teachers are under so much pressure to solve the fact that the culture has been undermined by social media, by this sort of mean behaviour that subtly is being permitted to exist, just because it's so hard to stop.” By limiting the influence of these platforms, Einstein suggests, schools may have a better chance to rebuild interpersonal connections and teach students healthier ways to resolve conflicts.

For advocates like Sonya Ryan, whose 15-year-old daughter was tragically murdered by an online predator, the legislation represents a monumental step forward. "It's too late for my daughter Carly and the many other children who have lost their lives in Australia, but let us stand together on their behalf and embrace this together," said Ryan.

The argument against banning under 16s on social media

Critics of the legislation caution that the ban might isolate children and young people or limit their access to supportive online communities. This is especially true for LGBTQI+ young people, who rely heavily on these platforms to connect with peers, access vital resources, and find affirming spaces that may not be available to them offline. There are also concerns that underage users will be hesitant to report harm experienced on these sites for fear of rebuke. Pastoral care teams will need to step in by directing students to age-appropriate online resources and ensuring in-person support systems are in place, helping to replace the sense of community that may be lost under the new laws.

On the flip side, proponents of the ban argue that the benefits far outweigh the risks and that this world-first law will foster a safer digital environment for Australia's youth.

Undoubtedly, there are challenges ahead for the government, social media companies, schools, education services, mental health advocates, and parents alike. For the legislation to succeed, it must address the underlying issues. Without safeguards to monitor exempted platforms and prevent young people from migrating to less regulated digital spaces, the risks may simply shift rather than diminish. Ongoing vigilance and adaptive measures will be crucial in protecting Australian children and ensuring that this online safety amendment leads to lasting and meaningful change.

How will schools enforce a social media ban? Challenges in implementation

“The social media ban legislation has been released and passed within a week and, as a result, no one can confidently explain how it will work in practice – the community and platforms are in the dark about what exactly is required of them,” said DIGI managing director Sunita Bose.

There is no doubt this legislation was crafted with good intentions, but its success will depend on effective enforcement and collaboration with social media platforms. To tackle this, the government has allocated $6.5 million to test various age verification technologies, including biometric checks, digital ID systems, and facial analysis, all of which aim to reliably identify underage users and enforce the ban on the platform side.

AI-driven tools like AgeID and Yoti, which are currently being trialled internationally, already show promise in estimating users' ages. However, they remain in the experimental stages, and integrating these systems into existing infrastructure poses significant challenges. Ensuring their functionality across school-managed networks and personal devices adds another layer of complexity.

Beyond technical hurdles, these measures raise serious concerns about privacy, practicality, and scalability. Questions remain about how these systems will protect user data, avoid overreach, and operate seamlessly across a range of devices and operating systems.

Ultimately, while age assurance technologies will be essential for enforcement, their implementation must balance effectiveness with user privacy.

Students finding workarounds: The unintended consequences of a social media ban

Historically, children have demonstrated a knack for bypassing restrictions. One only has to think back to Leisure Suit Larry's infamous age gate, where trivia questions intended to block underage players were easily circumvented. There are concerns among the academic community that a similar pattern may emerge in response to this social media account ban. Professor Barney Tan cautions that “Without stringent age verification mechanisms and industry cooperation, children may find ways to circumvent these restrictions.”

One key concern is that young people might be driven to less regulated or more dangerous online spaces, such as the dark web, where harmful content is even more difficult to monitor and control. The persistence of banned content, such as Tate's toxic masculinity narratives, underscores this challenge. Tate has been banned from most major platforms, including TikTok, Instagram, and YouTube, for violating policies on hate speech and promoting dangerous organisations, yet his material remains accessible via fan accounts and lesser-known platforms. This demonstrates how restrictions can shift rather than eliminate the problem. Likewise, following the shutdown of Omegle, a platform notorious for facilitating unsafe interactions, users have sought alternative avenues to continue similar activities, with platforms such as Chatroulette, Chatrandom, and Shagle experiencing increased traffic in Omegle's absence.

Another concern is the growing use of Virtual Private Networks (VPNs) among teens, who will likely exploit this loophole to bypass the ban. VPNs allow users to mask their location and access restricted content, making it difficult for platforms and authorities to enforce age restrictions effectively. A 2023 eSafety survey revealed that 34% of Australian teens already use VPNs to circumvent online barriers, with this number expected to grow as digital restrictions tighten.

To address these risks, robust monitoring tools and a focus on digital literacy will be essential in Australian schools, ensuring students can navigate the online world safely and responsibly.

A unified approach: Legislation and technology to improve online safety

While the government hopes this new legislation will place accountability on social media platforms, schools have an ongoing responsibility to safeguard the welfare of their students in the online space.

Although this responsibility is not yet legislated in Australia as it is in the UK, Australian schools have a duty of care to monitor and address risks associated with digital interactions. Failure to act leaves schools vulnerable to liability and risks eroding trust among parents and the wider community.

Many Australian teens already see schools as a key source of online safety information. A recent eSafety survey revealed that 43% of teens found their school to be the most trusted channel for online safety information, ahead of eSafety websites and parents or carers.

However, many schools lack the tools needed to effectively monitor and manage online activity within their networks. Without such capabilities, risks like cyberbullying, exposure to harmful content, and the misuse of VPNs to bypass restrictions can easily go unnoticed. This is where proactive solutions like Fastvue Reporter play a crucial role, enabling schools to bridge the gap between policy and practice.

Fastvue offers schools a comprehensive solution to monitor and address risks of online harms in real time, aligning with the evolving demands of the new legislation. By providing actionable insights into student online behaviour, Fastvue equips educators with the tools to detect issues early and intervene before problems escalate.

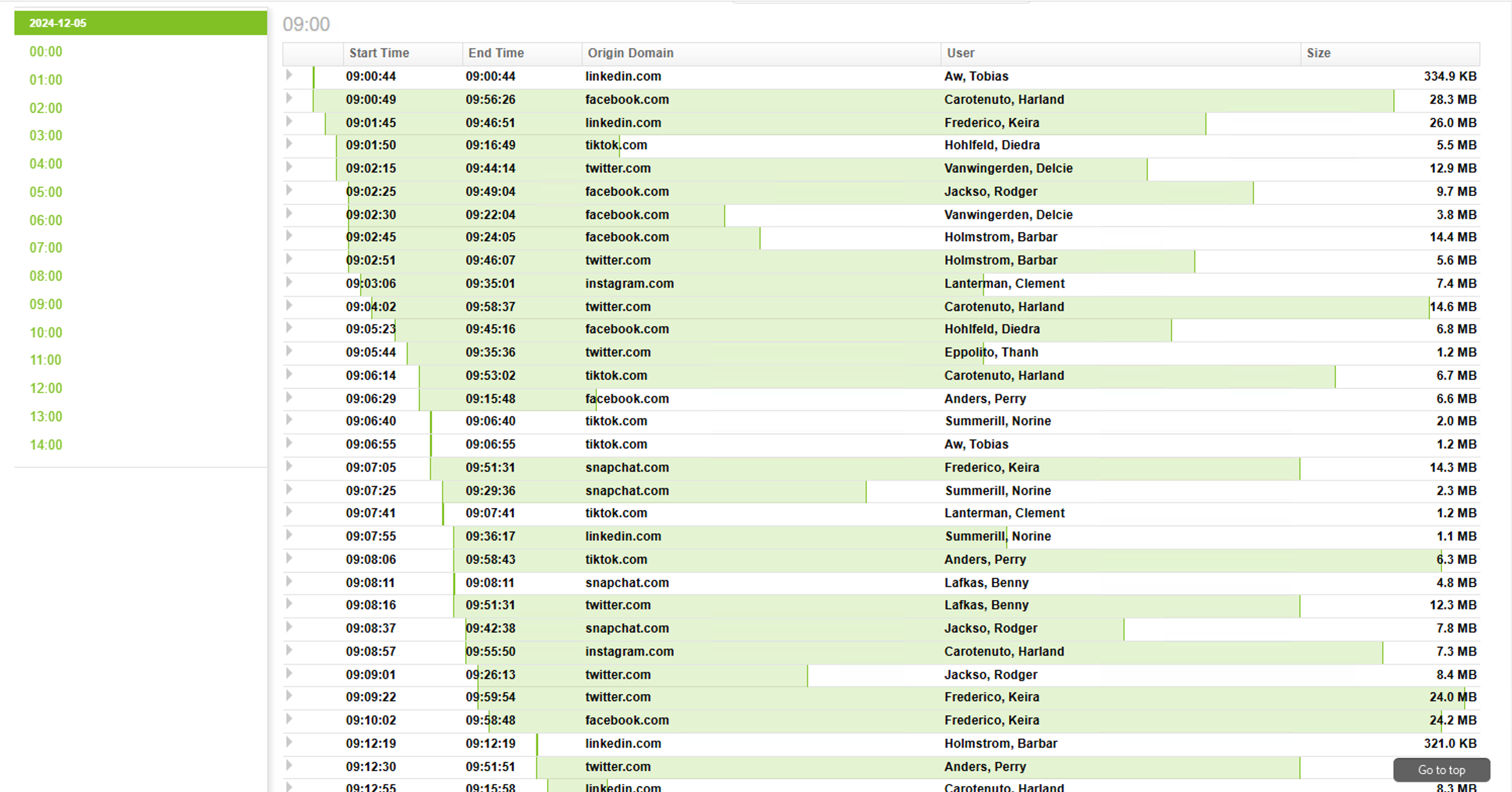

Monitoring social media use in schools

As schools begin to address the challenges that arise from the social media ban, effective monitoring of online activity will be more important than ever. Addressing concerns such as cyberbullying, exposure to harmful content and students attempting to bypass restrictions requires robust tools that provide comprehensive network visibility and actionable insights.

By offering real-time monitoring, keyword detection, and detailed reporting, tools like Fastvue Reporter empower schools to proactively identify and address online risks, fostering a safer and more supportive digital environment.

Monitor your network for:

- Suicide and Self-harm
- Extremism & Radicalisation
- Bullying & Violence
- Child Sexual Exploitation & Illegal Content
- Cyber Threats
- Bandwidth Issues
- Application Use

While a significant step forward, the social media ban is not a standalone solution. Platforms will require time to implement verification technologies, and students may still find ways to access social media outside school hours. Schools must, therefore, adopt robust tools and safeguarding strategies to ensure a safer and more supportive digital environment, leveraging solutions like Fastvue to turn this challenge into an opportunity for leadership in online safety.

Fastvue integrates with the world's leading firewalls, allowing schools to align with legislative requirements and lead in creating secure, digitally literate environments for students. As the social media ban takes effect, such tools will become even more essential in ensuring technology serves as a positive force in education.

Ready to learn more? Book a demo with a member of the Fastvue team to discuss how our education solution can support your school's needs.

Take Fastvue Reporter for a test drive

You can’t put a price on safer internet use. Download our FREE 14-day trial, or schedule a demo and we'll show you how it works.
